
Notes

Bayes' theorem: If `E_1, E_2, ..., E_n` are n nonempty events which constitute a partition of the sample space S, i.e. `E_1, E_2, ..., E_n` are pairwise disjoint and `E_1 ∪ E_2 ∪ ... ∪ E_n = S`, and A is any event of nonzero probability, then

`P(E_i|A) = (P(E_i) P(A|E_i))/(sum_(j=1)^n P(E_j) P(A|E_j))`

for any i = 1, 2, 3, ..., n

Proof: By formula of conditional probability, we know that

`P(E_i|A) = (P(A ∩ E_i )) / (P(A))`

`= (P(E_i ) (P(A|E_i )))/ (P(A))` (by multiplication rule of probability)

`= (P(E_i )P(A|E_i ))/ (sum_(j = 1)^n P(E _j)P(A|E_j)) ` (by the result of theorem of total probability)

Remark: The following terminology is generally used when Bayes' theorem is applied. The events `E_1, E_2, ..., E_n` are called hypotheses.

The probability `P(E_i)` is called the a priori probability of the hypothesis `E_i`.

The conditional probability `P(E_i|A)` is called the a posteriori probability of the hypothesis `E_i`.

Bayes' theorem is also called the formula for the probability of "causes". Since the events `E_i` form a partition of the sample space S, one and only one of the events `E_i` occurs (i.e. one of the events `E_i` must occur and only one can occur). Hence, the above formula gives us the probability of a particular `E_i` (i.e. a "cause"), given that the event A has occurred.
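As a small illustration of the formula, the sketch below computes the a posteriori probabilities `P(E_i|A)` from the a priori probabilities `P(E_i)` and the conditional probabilities `P(A|E_i)`. The function name `bayes_posteriors` and the two-bag data are hypothetical and chosen only for this example; exact fractions are used to avoid rounding.

```python
from fractions import Fraction


def bayes_posteriors(priors, likelihoods):
    """Return the list of P(E_i | A) for the hypotheses E_1, ..., E_n.

    priors[i]      = P(E_i); assumed to sum to 1 because the E_i partition S
    likelihoods[i] = P(A | E_i)
    """
    if sum(priors) != 1:
        raise ValueError("priors must sum to 1")
    # Denominator of Bayes' formula: P(A) = sum_j P(E_j) P(A | E_j)
    p_a = sum(p * l for p, l in zip(priors, likelihoods))
    if p_a == 0:
        raise ValueError("A must have nonzero probability")
    # Numerator for each i: P(E_i) P(A | E_i)
    return [p * l / p_a for p, l in zip(priors, likelihoods)]


# Hypothetical data: one of two bags is chosen with equal probability,
# and A is the event "a red ball is drawn".
priors = [Fraction(1, 2), Fraction(1, 2)]        # P(E_1), P(E_2)
likelihoods = [Fraction(3, 5), Fraction(1, 5)]   # P(A|E_1), P(A|E_2)

posteriors = bayes_posteriors(priors, likelihoods)
print(posteriors)  # [Fraction(3, 4), Fraction(1, 4)]
```

Here `P(A) = 1/2 · 3/5 + 1/2 · 1/5 = 2/5`, so `P(E_1|A) = (3/10)/(2/5) = 3/4`. The a posteriori probabilities add up to 1, as expected for a partition.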

Video link: https://youtu.be/UVx7q7qN-6k

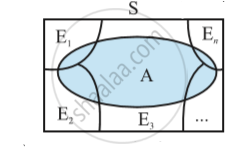

1) Partition of a sample space:

A set of events `E_1, E_2, ..., E_n` is said to represent a partition of the sample space S if

(a) `E_i ∩ E_j = φ, i ≠ j, i, j = 1, 2, 3, ..., n`

(b) `E_1 ∪ E_2 ∪ ... ∪ E_n = S` and

(c) `P(E_i) > 0 "for all" i = 1, 2, ..., n.`

In other words, the events `E_1, E_2, ..., E_n` represent a partition of the sample space S if they are pairwise disjoint, exhaustive and have nonzero probabilities.

As an example, any event E with `0 < P(E) < 1` and its complement E′ form a partition of the sample space S, since they satisfy E ∩ E′ = φ and E ∪ E′ = S and both have nonzero probability.
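For a finite sample space, conditions (a), (b) and (c) can be checked mechanically. The following is a minimal sketch, assuming that events are represented as Python sets of outcomes and that `prob` gives the probability of each outcome; the helper name `is_partition` and the die example are illustrative only.

```python
from fractions import Fraction


def is_partition(events, sample_space, prob):
    """Check conditions (a), (b), (c) on a finite sample space.

    events       : list of sets E_1, ..., E_n
    sample_space : the set S
    prob         : dict mapping each outcome of S to its probability
    """
    # (a) pairwise disjoint: E_i ∩ E_j is empty for i != j
    for i in range(len(events)):
        for j in range(i + 1, len(events)):
            if events[i] & events[j]:
                return False
    # (b) exhaustive: the union of all E_i equals S
    if set().union(*events) != sample_space:
        return False
    # (c) every E_i has nonzero probability
    return all(sum(prob[w] for w in e) > 0 for e in events)


# One roll of a fair die.
S = {1, 2, 3, 4, 5, 6}
prob = {w: Fraction(1, 6) for w in S}

E = {1, 2}
print(is_partition([E, S - E], S, prob))                  # True
print(is_partition([{1, 2, 3}, {3, 4, 5, 6}], S, prob))   # False
```

The second call fails condition (a), because the outcome 3 belongs to both events.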

2) Theorem of total probability:

Let `{E_1, E_2,...,E_n}` be a partition of the sample space S, and suppose that each of the events `E_1, E_2,..., E_n` has nonzero probability of occurrence. Let A be any event associated with S, then

`P(A) = P(E_1) P(A|E_1) + P(E_2) P(A|E_2) + ... + P(E_n) P(A|E_n)`

= ` sum _(j=1) ^ n P(E_j) P(A|E_j)`

Proof: Given that `E_1, E_2, ..., E_n` is a partition of the sample space S (see the figure).

Therefore, `S = E_1 ∪ E_2 ∪ ... ∪ E_n` ... (1)

and `E_i ∩ E_j = φ, i ≠ j, i, j = 1, 2, ..., n`

Now, we know that for any event A,

A = A ∩ S

=` A ∩ (E_1 ∪ E_2 ∪ ... ∪ E_n)`

= `(A ∩ E_1) ∪ (A ∩ E_2) ∪ ...∪ (A ∩ E_n)`

Also, `A ∩ E_i` and `A ∩ E_j` are subsets of `E_i` and `E_j` respectively. Since `E_i` and `E_j` are disjoint for i ≠ j, `A ∩ E_i` and `A ∩ E_j` are also disjoint for all i ≠ j, i, j = 1, 2, ..., n.

Thus,

`P(A) = P [(A ∩ E_1) ∪ (A ∩ E_2)∪ .....∪ (A ∩ E_n)]`

= `P (A ∩ E_1) + P (A ∩ E_2) + ... + P (A ∩ E_n)`

Now, by multiplication rule of probability, we have

`P(A ∩ E_i) = P(E_i) P(A|E_i)`, as `P(E_i) ≠ 0` for all i = 1, 2, ..., n

Therefore, P (A) = `P (E_1) P (A|E_1) + P (E_2) P (A|E_2) + ... + P (E_n)P(A|E_n)`

or `P(A) = sum_(j = 1)^n P(E_j) P(A|E_j)`
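The result can be checked numerically on a small example. The sketch below assumes one roll of a fair die with equally likely outcomes; the partition `{1, 2}`, `{3, 4, 5, 6}` and the event A = "an even number shows" are hypothetical choices made only for illustration. It compares the sum `P(E_1) P(A|E_1) + P(E_2) P(A|E_2)` with `P(A)` computed directly.

```python
from fractions import Fraction

S = {1, 2, 3, 4, 5, 6}  # sample space: one roll of a fair die


def P(event):
    """Probability of an event when all outcomes in S are equally likely."""
    return Fraction(len(event & S), len(S))


def P_given(a, e):
    """Conditional probability P(a | e) = P(a ∩ e) / P(e); requires P(e) != 0."""
    return P(a & e) / P(e)


partition = [{1, 2}, {3, 4, 5, 6}]  # E_1, E_2: disjoint, exhaustive, nonzero
A = {2, 4, 6}                       # the event "an even number shows"

# Right-hand side of the theorem: sum over j of P(E_j) P(A | E_j)
total = sum(P(E) * P_given(A, E) for E in partition)

print(total)  # 1/2
print(P(A))   # 1/2
```

Here `P(E_1) P(A|E_1) = 1/3 · 1/2 = 1/6` and `P(E_2) P(A|E_2) = 2/3 · 1/2 = 1/3`, and their sum 1/2 agrees with `P(A)`.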

Video list: https://youtu.be/_jY8B_0dZgo